Using AI Models like OpenAI GPT in Kitchn

This recipe lets you leverage an AI model like OpenAI’s GPT-4 in Kitchn.

This recipe is a simple example of how to use an AI model like OpenAI’s GPT-4 in the Kitchn platform. You can use it as the basis for a creative testing workflow, e.g. to generate headlines.

Prerequisites

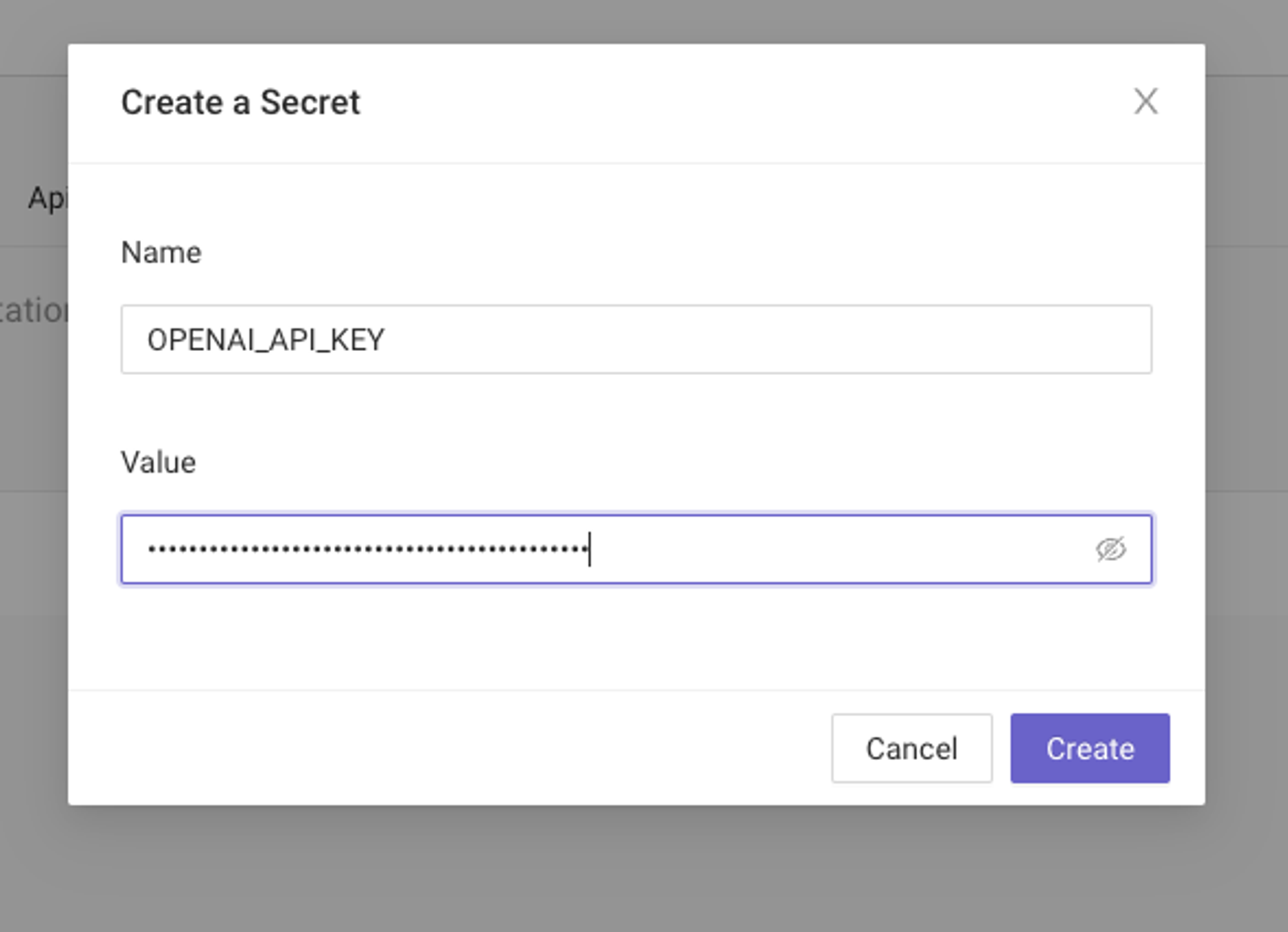

For this recipe to work, you need to store your OpenAI API key securely in Kitchn as a secret. The recipe reads it as {{secrets.OPENAI_API_KEY}}, so name the secret OPENAI_API_KEY. Refer to this documentation to learn how to do it.

JSON Recipe

Import the automation recipe(s) into your own account. Don’t know how? Learn here.

Automation Recipe “OpenAI in Kitchn”

{"name":"OpenAI in Kitchn","description":"A blank recipe","nodes":{"start_1":{"type":"start","logging_enabled":false,"error_rules":[],"x":1096,"y":362},"stop_1":{"type":"stop","logging_enabled":false,"error_rules":[],"x":1653,"y":724},"input_string_1":{"type":"input_string","logging_enabled":false,"error_rules":[],"x":257,"y":590,"group":"group_1","inputs":{"string":{"title":"openai_model","description":"A string to input","export_enabled":true,"data":"gpt-4"}},"outputs":{"string":{"title":"open_ai_model","description":"A string to input"}}},"input_string_2":{"type":"input_string","logging_enabled":false,"error_rules":[],"x":250,"y":935,"group":"group_1","inputs":{"string":{"title":"prompt","description":"A string to input","export_enabled":true,"data":"Generate a headline for my product called \"Cool Sunglasses\" to use in a Facebook ad:"}},"outputs":{"string":{"title":"prompt","description":"A string to input"}}},"input_string_3":{"type":"input_string","logging_enabled":false,"error_rules":[],"x":259,"y":755,"group":"group_1","inputs":{"string":{"title":"system_message","description":"A string to input","export_enabled":true,"data":"You are a helpful marketing assistant. 
Respond in a casual tone."}},"outputs":{"string":{"title":"system_message","description":"A string to input"}}},"post_url_v4_1":{"type":"post_url_v4","logging_enabled":false,"error_rules":[],"x":1654,"y":524,"group":"group_2","inputs":{"url":{"export_enabled":true,"data":"https://api.openai.com/v1/chat/completions"},"headers":{"export_enabled":true,"data":[{"type":"Bearer","config":{"token":"{{secrets.OPENAI_API_KEY}}"}}]}}},"input_any_1":{"type":"input_any","logging_enabled":false,"error_rules":[],"x":264,"y":406,"group":"group_2","inputs":{"any":{"title":"openai_chat_completion_template","description":"The any to input","export_enabled":true,"data":{"model":"gpt-3.5-turbo","messages":[{"role":"system","content":"You are a helpful assistant."},{"role":"user","content":"Hello!"}]}}},"outputs":{"any":{"title":"openai_chat_completion_template","description":"The any to input"}}},"patch_json_v3_1":{"type":"patch_json_v3","logging_enabled":false,"error_rules":[],"x":1353,"y":519,"group":"group_2","inputs":{"patches":{"export_enabled":true,"data":[{"op":"replace","path":"/messages/1/content","value":"{{inputValue(\"\")}}"}]},"data":{"title":"openai_chat_completion_template","description":"The data the patches are applied to"},"value":{"title":"prompt","description":"A value that can be used in patches"}},"outputs":{"data":{"title":"openai_chat_completion_template","description":"The patched data"}}},"extract_v2_1":{"type":"extract_v2","logging_enabled":false,"error_rules":[],"x":1929,"y":533,"group":"group_2","inputs":{"pointer":{"export_enabled":true,"data":"/choices/0/message/content"},"default_value":{"export_enabled":true}},"outputs":{"data":{"title":"result","description":"The extracted 
data"}}},"patch_json_v3_2":{"type":"patch_json_v3","logging_enabled":false,"error_rules":[],"x":768,"y":516,"group":"group_2","inputs":{"patches":{"export_enabled":true,"data":[{"op":"replace","path":"/model","value":"{{inputValue(\"\")}}"}]},"value":{"title":"model","description":"A value that can be used in patches"},"data":{"title":"openai_chat_completion_template","description":"The data the patches are applied to"}},"outputs":{"data":{"title":"openai_chat_completion_template","description":"The patched data"}}},"patch_json_v3_3":{"type":"patch_json_v3","logging_enabled":false,"error_rules":[],"x":1064,"y":517,"group":"group_2","inputs":{"patches":{"export_enabled":true,"data":[{"op":"replace","path":"/messages/0/content","value":"{{inputValue(\"\")}}"}]},"value":{"title":"system_message","description":"A value that can be used in patches"},"data":{"title":"openai_chat_completion_template","description":"The data the patches are applied to"}},"outputs":{"data":{"title":"openai_chat_completion_template","description":"The patched data"}}}},"edges":[{"id":"input_string_2.string:patch_json_v3_1.value","points":[]},{"id":"patch_json_v3_3.data:patch_json_v3_1.data","points":[]},{"id":"patch_json_v3_1.data:post_url_v4_1.body","points":[]},{"id":"post_url_v4_1.body:extract_v2_1.data","points":[]},{"id":"post_url_v4_1.posted:extract_v2_1.extract","points":[]},{"id":"extract_v2_1.extracted:stop_1.stop","points":[]},{"id":"input_any_1.any:patch_json_v3_2.data","points":[]},{"id":"input_string_1.string:patch_json_v3_2.value","points":[]},{"id":"patch_json_v3_2.data:patch_json_v3_3.data","points":[]},{"id":"patch_json_v3_3.patched:patch_json_v3_1.patch","points":[]},{"id":"patch_json_v3_1.patched:post_url_v4_1.post","points":[]},{"id":"start_1.start:patch_json_v3_2.patch","points":[]},{"id":"patch_json_v3_2.patched:patch_json_v3_3.patch","points":[]},{"id":"input_string_3.string:patch_json_v3_3.value","points":[]}],"groups":{"group_1":{"title":"User 
Inputs","description":"","x":974,"y":496,"inputs":["input_string_1.string","input_string_3.string","input_string_2.string"],"outputs":["input_string_1.string","input_string_2.string","input_string_3.string"]},"group_2":{"title":"Call OpenAI API","description":"","x":1309,"y":506,"inputs":["patch_json_v3_1.value","patch_json_v3_2.value","patch_json_v3_2.patch","patch_json_v3_3.value"],"outputs":["extract_v2_1.extracted","extract_v2_1.data"]}},"widgets":{"widget_1":{"title":"Prompt","description":"A string to input","position":0,"input":"input_string_2.string"},"widget_2":{"title":"OpenAI Model","description":"A string to input","position":0,"options":[{"data":"gpt-3.5-turbo","title":"gpt-3.5-turbo"},{"data":"gpt-4","title":"gpt-4"}],"input":"input_string_1.string"},"widget_3":{"title":"System Message","description":"A string to input","position":0,"input":"input_string_3.string"}},"variables":{},"config":{"capture_inputs_enabled":false,"caching_enabled":false},"tags":[]}

How To

Step 1 - Inputs

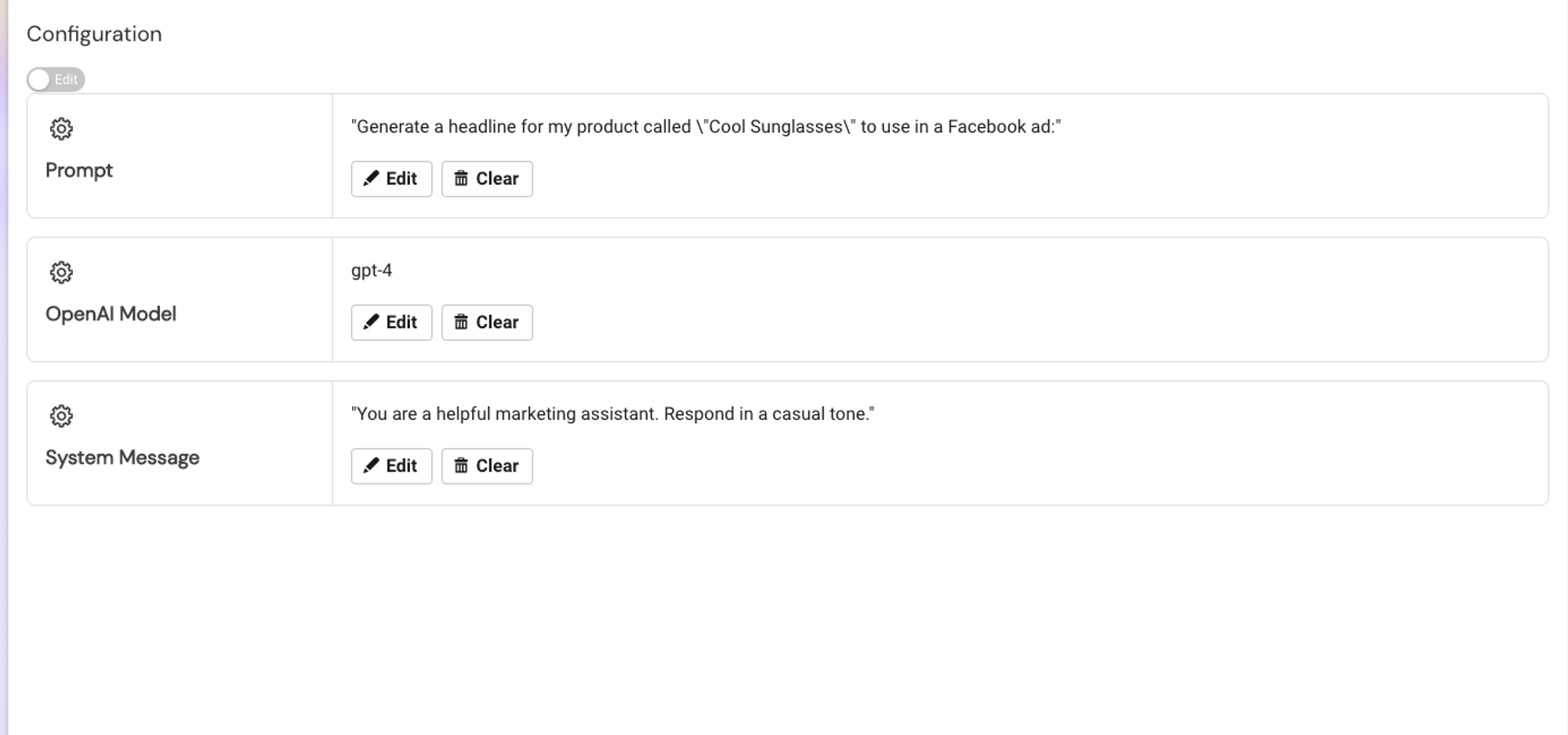

To configure the automation for your needs, enter the prompt you want the AI to work on, choose a model (e.g. gpt-3.5-turbo or gpt-4, making sure your OpenAI account has access to it), and adapt the system message.

Step 2 - Run the Automation

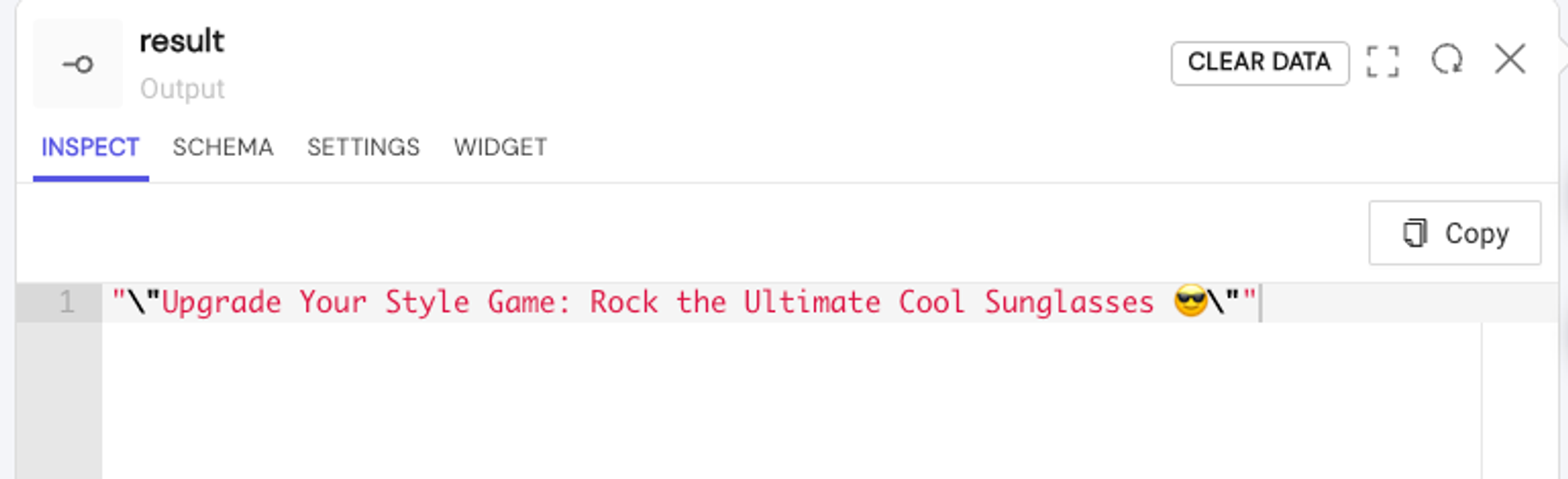

Click on Run Automation to pass the inputs to the OpenAI API and then observe the result:

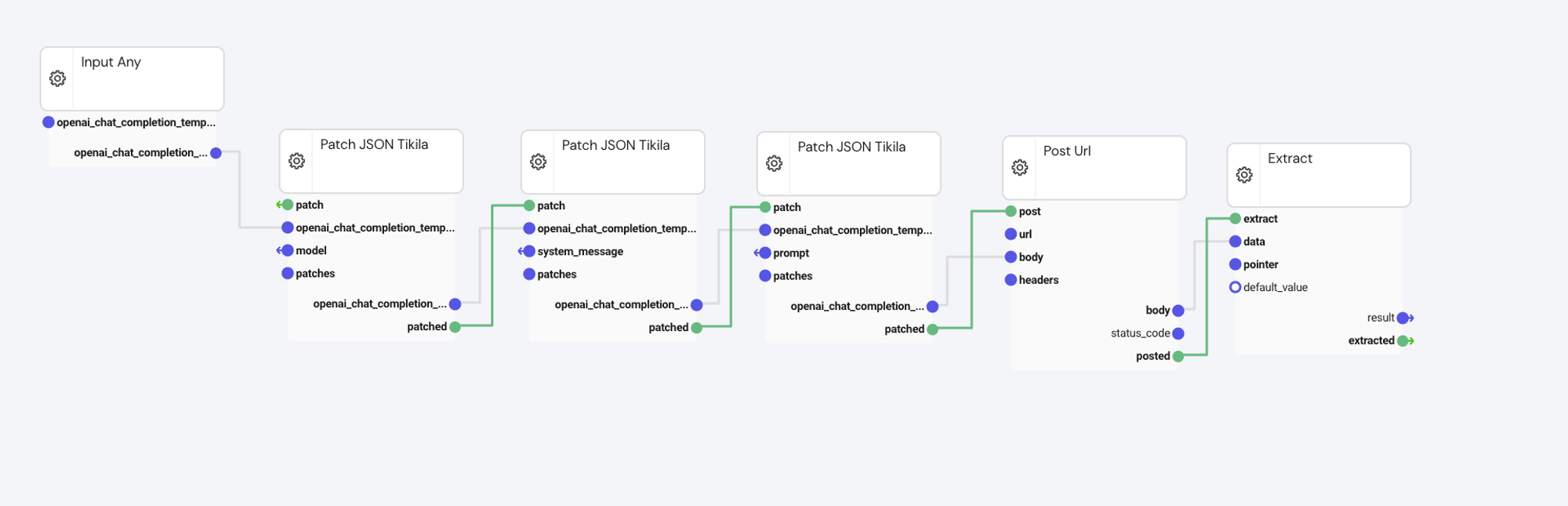

How this automation works under the hood

This automation takes your inputs (prompt, model, system message) and uses a series of OLD Patch JSON (TiKiLa) nodes to combine them into the data format the OpenAI API requires:

{
  "model": "gpt-4",
  "messages": [
    {
      "role": "system",
      "content": "You are a helpful marketing assistant. Respond in a casual tone."
    },
    {
      "role": "user",
      "content": "Generate a headline for my product called \"Cool Sunglasses\" to use in a Facebook ad:"
    }
  ]
}
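For readers who want to see the patching logic outside of Kitchn, here is a minimal Python sketch of what the three patch nodes appear to do: each one applies a single JSON Patch style "replace" operation to the template, in the order model, system message, prompt. The helper names `replace_at` and `build_body` are hypothetical and are not part of Kitchn; the template and the pointer paths are taken from the recipe JSON above.

```python
import copy

# Template mirroring the recipe's "openai_chat_completion_template" input.
TEMPLATE = {
    "model": "gpt-3.5-turbo",
    "messages": [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello!"},
    ],
}


def replace_at(doc, pointer, value):
    """Return a copy of doc with the value at a JSON Pointer path replaced.

    Hypothetical helper: it only covers the "replace" operation that the
    recipe's patch nodes use, not the full JSON Patch specification.
    """
    result = copy.deepcopy(doc)
    # Split "/messages/1/content" into tokens and undo JSON Pointer escaping.
    tokens = [t.replace("~1", "/").replace("~0", "~") for t in pointer.split("/")[1:]]
    target = result
    for token in tokens[:-1]:
        target = target[int(token)] if isinstance(target, list) else target[token]
    last = tokens[-1]
    if isinstance(target, list):
        target[int(last)] = value  # raises IndexError if the index is missing
    else:
        if last not in target:
            raise KeyError(f"cannot replace missing key: {pointer}")
        target[last] = value
    return result


def build_body(model, system_message, prompt):
    """Apply the three replacements in the same order as the recipe's nodes."""
    body = replace_at(TEMPLATE, "/model", model)
    body = replace_at(body, "/messages/0/content", system_message)
    body = replace_at(body, "/messages/1/content", prompt)
    return body
```

Calling `build_body` with the default inputs from the recipe should produce the request body shown above, while leaving the template itself untouched.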

It then calls the OpenAI Chat Completions endpoint https://api.openai.com/v1/chat/completions using your OpenAI API key (see Prerequisites) to generate a response, and extracts the generated text from the response at /choices/0/message/content.
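As a rough equivalent of the POST and extract steps, the sketch below builds the same request with Python's standard library and pulls the text out of the response. The function names are hypothetical and this is not how Kitchn implements its nodes; the URL, the Bearer authorization and the /choices/0/message/content path come from the recipe JSON, and the `default` argument stands in for the extract node's default value input.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(body, api_key):
    """Build the POST request with a Bearer token, as the recipe's POST node does."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def extract_content(response, default=None):
    """Read /choices/0/message/content, falling back to default if it is absent."""
    try:
        return response["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError):
        return default


def complete(body, api_key):
    """Send the request and return the generated text (performs a network call)."""
    with urllib.request.urlopen(build_request(body, api_key)) as resp:
        return extract_content(json.load(resp))
```

To try it, pass a request body like the one shown earlier together with your own API key, for example `complete(body, api_key)`. Keep the key out of the source code, in the same spirit as storing it as a secret in Kitchn.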